The University of Massachusetts Amherst-based U.S. COVID-19 Forecast Hub, a collaborative research consortium, has generated the most consistently accurate predictions of pandemic deaths at the state and national level, according to a paper published April 8 in the Proceedings of the National Academy of Sciences. Every week since early April 2020, this international effort has produced a multi-model ensemble forecast of short-term COVID-19 trends in the U.S.

The COVID-19 pandemic has highlighted the vital role that collaboration and coordination among public health agencies, academic teams and industry partners can play in developing modern modeling capabilities to support local, state and federal responses to infectious disease outbreaks.

“Anticipating outbreak change is critical for optimal resource allocation and response,” says lead author Estee Cramer, a Ph.D. candidate in epidemiology in the UMass Amherst School of Public Health and Health Sciences. “These forecasting models provide specific, quantitative and evaluable predictions that inform short-term decisions, such as healthcare staffing needs, school closures and allocation of medical supplies.”

An unprecedented global cooperative effort, the Forecast Hub is the largest infectious disease prediction project ever conducted. The ensemble research includes just under 300 authors affiliated with 85 groups, including U.S. governmental agencies such as the Centers for Disease Control and Prevention (CDC); universities in the U.S., Canada, China, England, France and Germany; and scientific industry partners in the U.S. and India. The authors also include independent data analysts with no institutional affiliation, such as Youyang Gu, whose successful early modeling of the pandemic drew wide attention online.

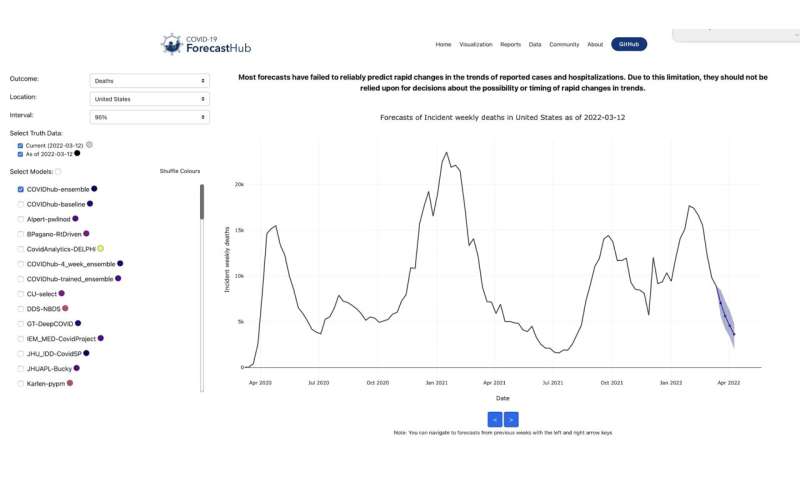

The Forecast Hub is directed by Nicholas Reich and Evan Ray, faculty in the UMass School of Public Health and Health Sciences. “It has been an incredible experience to collaborate directly with so many talented and motivated groups to build this ensemble forecast,” says Reich, a biostatistician and the senior author of the paper. “In addition to the operational aspect of the Hub, where the forecasts have been used by CDC every week for the last two years, this paper shows how we can use these data, collected in real-time across the entire pandemic, to better understand which modeling approaches worked and which did not, and why. It’s going to take many years to unpack all of the lessons of the last few years. In some ways, this is just the beginning.”

In April 2020, the CDC partnered with the Reich Lab to create and fund the COVID-19 Forecast Hub. At that time, the Hub began collecting, disseminating and synthesizing specific predictions from different academic, industry and independent research groups. The effort grew rapidly, and in its first two years the U.S. Forecast Hub collected over half a billion rows of forecast data from nearly 100 research groups. The CDC uses the Hub’s weekly forecast in official public communications about the pandemic.

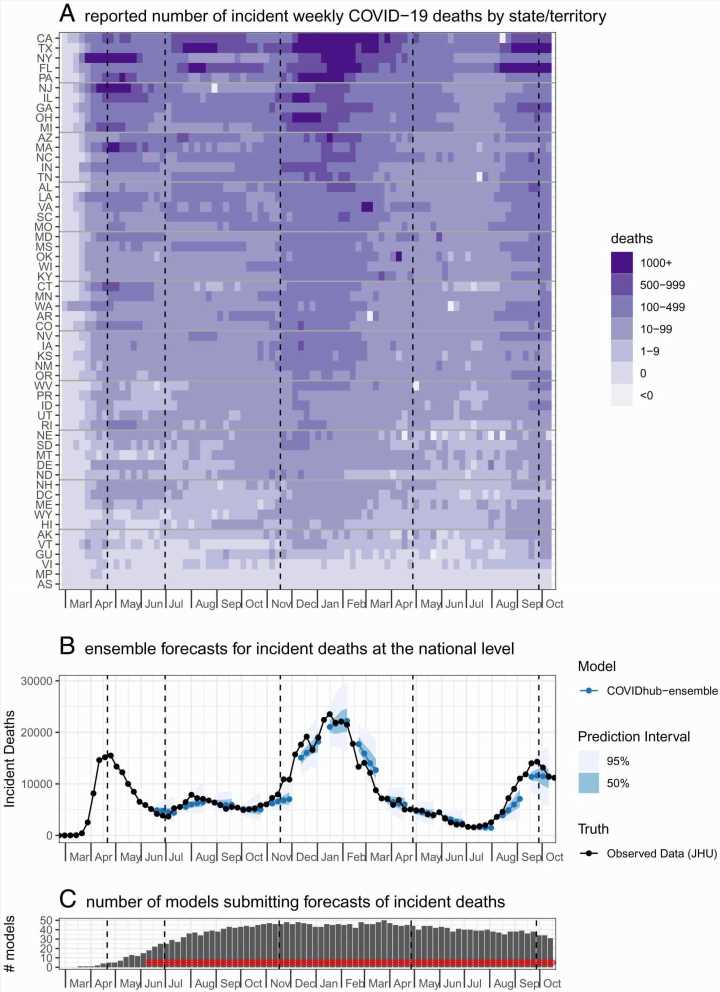

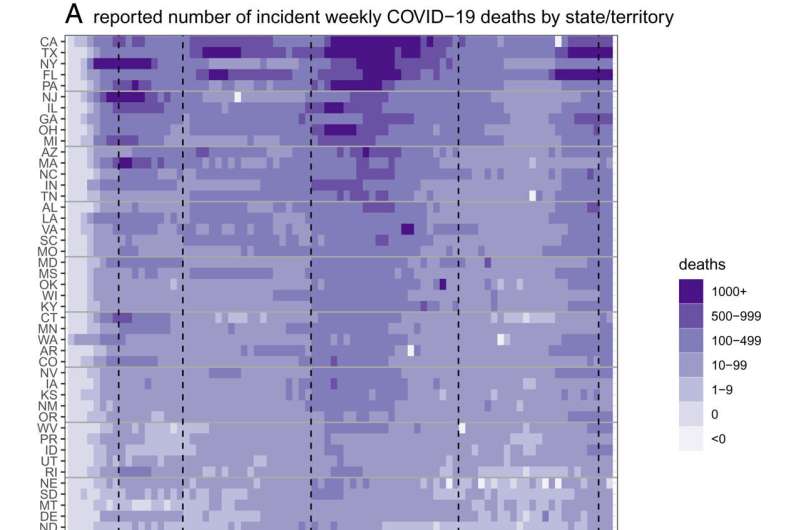

The paper compared the accuracy of short-term forecasts of U.S. COVID-19 deaths during the first year and a half of the pandemic. The 27 individual models that submitted forecasts consistently during that period varied widely in accuracy across time, locations and forecast horizons. The ensemble model, which combined the individual forecasts, was more consistently accurate than any of them.
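The article does not say exactly how the individual forecasts were combined. As a minimal sketch, assuming each model reports predictions at a shared set of quantile levels and the ensemble takes the median across models at each level (a common way to build quantile ensembles, used here only for illustration), the combination step might look like this. The function name `median_ensemble`, the quantile levels and the forecast values are all hypothetical.

```python
from statistics import median

# Hypothetical quantile levels; a real submission format may use many more.
QUANTILE_LEVELS = [0.025, 0.25, 0.5, 0.75, 0.975]


def median_ensemble(model_forecasts: list[dict[float, float]]) -> dict[float, float]:
    """Combine quantile forecasts by taking, at each quantile level,
    the median of the values predicted by the individual models."""
    return {
        q: median(forecast[q] for forecast in model_forecasts)
        for q in QUANTILE_LEVELS
    }


# Hypothetical weekly death forecasts from three models for one location.
models = [
    {0.025: 80, 0.25: 100, 0.5: 110, 0.75: 120, 0.975: 150},
    {0.025: 60, 0.25: 90, 0.5: 105, 0.75: 125, 0.975: 170},
    {0.025: 95, 0.25: 115, 0.5: 130, 0.75: 140, 0.975: 160},
]

ensemble = median_ensemble(models)
print(ensemble)
```

Taking the median rather than the mean means a single outlying model cannot pull the combined forecast far off course, which is one way an ensemble can offset individual model biases.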

“This project demonstrates the importance of diversity in modeling approaches and modeling assumptions,” Cramer says. “Including a variety of models in the ensemble contributes to its robustness and ability to overcome individual model biases. This is a really important consideration for public health agencies when using forecasts to inform policies during an outbreak of any size.”

The Forecast Hub ensemble was the only model that met all three of the following criteria: it ranked in the top half of all models for more than 85% of the forecasts it made, it had better overall accuracy than the baseline forecast in every location, and it had better overall four-week-ahead accuracy than the baseline forecast in every week.

All of the models, including the ensemble, made less consistent and less accurate forecasts during the four waves of the pandemic that occurred during the study period: the summer 2020 wave in the South and Southwest, the late fall 2020 rise in deaths in the upper Midwest, the spring 2021 Alpha variant wave in Michigan and the nationwide Delta variant wave in the summer of 2021. “Models in general systematically underpredicted the mortality curve as trends were rising and overpredicted as trends were falling,” the paper states.

Forecasts became less accurate as models made longer-term predictions. Probabilistic error at a 20-week horizon was three to five times larger than at a one-week horizon. The paper concludes that this resulted from underestimating the possibility of future increases in cases. “Because many of us interact with weather forecasts almost every day on our phones, we know not to trust the daily precipitation forecasts much past a two-week horizon,” Reich says. “But we don’t have the same intuition yet as a society about infectious disease forecasts. This work shows that the accuracy of forecasts for deaths is pretty good for the next four weeks, but at horizons of six weeks or more, the accuracy is typically substantially worse.”
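The article reports “probabilistic error” without naming the metric. One metric commonly used to score quantile forecasts of this kind is the weighted interval score (WIS), which rewards narrow prediction intervals but adds a penalty whenever the observed value falls outside them. The sketch below assumes that metric purely for illustration; the function names and forecast values are hypothetical, and the example only shows why a forecast that misses a rise in deaths is scored so harshly.

```python
def interval_score(lower: float, upper: float, y: float, alpha: float) -> float:
    """Score for a central (1 - alpha) prediction interval [lower, upper].

    The interval width is always charged; an observation outside the
    interval adds a penalty scaled by 2 / alpha.
    """
    score = upper - lower
    if y < lower:
        score += (2 / alpha) * (lower - y)
    elif y > upper:
        score += (2 / alpha) * (y - upper)
    return score


def weighted_interval_score(
    med: float, intervals: dict[float, tuple[float, float]], y: float
) -> float:
    """Combine the absolute error of the median (weight 1/2) with the
    interval scores of K central intervals (weight alpha / 2 each),
    normalized by K + 1/2."""
    k = len(intervals)
    total = 0.5 * abs(y - med)
    for alpha, (lower, upper) in intervals.items():
        total += (alpha / 2) * interval_score(lower, upper, y, alpha)
    return total / (k + 0.5)


# Hypothetical forecast: median of 110 deaths, a 50% interval and a 95% interval.
intervals = {0.5: (100, 125), 0.05: (80, 160)}

score_in_range = weighted_interval_score(110, intervals, y=115)
score_missed_rise = weighted_interval_score(110, intervals, y=200)
print(round(score_in_range, 2), round(score_missed_rise, 2))
```

Here the missed-rise case scores roughly 15 times worse than the in-range case, which is consistent with the paper's explanation that underestimating future increases drives up error.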
