A new study shows that many UK radiographers have limited understanding of how new smart computer systems diagnose problems found on scans such as X-rays, MRI and CT scans. “Artificial Intelligence (AI) is on the verge of being more widely introduced into X-ray departments. This research shows we need to educate radiographers so that they can be sure of diagnosis, and know how to discuss the role of AI in radiology with patients and other healthcare practitioners,” said lead researcher Clare Rainey.

Radiographers are the specialists whom patients meet at the time of the scan. They are trained to recognise the variety of problems found on medical scans, such as broken bones, joint problems, and tumours, and are traditionally considered to bridge the gap between the patient and technology. There is a severe national shortage of radiographers and radiologists, and the NHS is about to introduce AI systems to aid diagnosis. Now a study presented at the UK Imaging and Oncology Conference in Liverpool (with simultaneous peer-reviewed publication, see below) suggests that, despite impressive performances reported by developers of AI systems, many radiographers are unsure how these new smart systems work.

Clare Rainey and Dr. Sonyia McFadden from Ulster University surveyed Reporting Radiographers on their understanding of how AI works (a “Reporting Radiographer” provides formal reports on X-ray images). Of the 86 radiographers surveyed, 53 (62%) said they were confident in how an AI system reaches its decision. However, fewer than a third of respondents would be confident communicating the AI decision to stakeholders, including patients, carers and other healthcare practitioners.

The study also found that if the AI confirmed their diagnosis, 57% of respondents would have more overall confidence in the finding; however, if the AI disagreed with their opinion, 70% would seek an additional opinion.

Clare Rainey said, “This survey highlights issues with UK reporting radiographers’ perceptions of AI used for image interpretation. There is no doubt that the introduction of AI represents a real step forward, but this shows we need resources to go into radiography education to ensure that we can make the best use of this technology. Patients need to have confidence in how the radiologist or radiographer arrives at an opinion.”

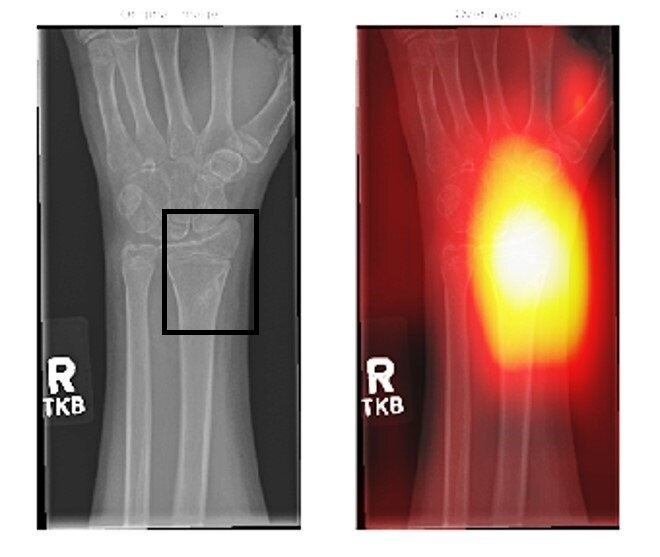

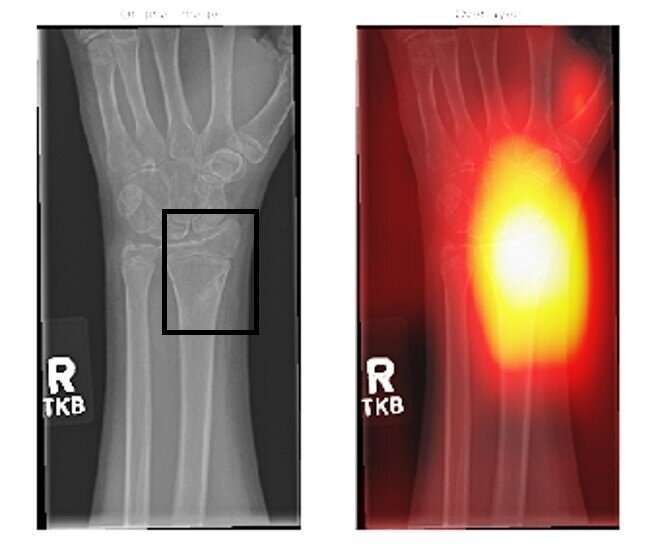

Modern forms of AI, where computer-based systems learn as they go along, are appearing in many places in everyday life, from self-learning robots in factories to self-driving cars and self-landing aircraft. Now the NHS is preparing to introduce these learning systems to its imaging services, such as X-rays and MRIs. These computerised systems are not expected to replace the final judgment of a skilled radiographer; however, they may offer a high-level first or second opinion on X-ray findings. This will help reduce the time needed for diagnosis and treatment, as well as giving a ‘belt and braces’ backup to human decisions.

Clare Rainey said, “It’s not strictly necessary for radiographers to understand everything about how these AI systems work; after all, I don’t understand how my TV or smartphone works, but I know how to use them. However, they do need to understand how the system makes the choices it does, so that they can both decide whether to accept the findings, and be able to explain these choices to patients.”

As Clare Rainey is unable to travel to Liverpool, this work is presented at the UKIO by Dr. Nick Woznitza. Dr. Woznitza said, “AI is really a range of techniques, which can have an exciting impact on what scans can tell us. My own group is working on how AI is applied to lung scans, which has the potential to help with diagnosing conditions from lung cancer to COVID.”
